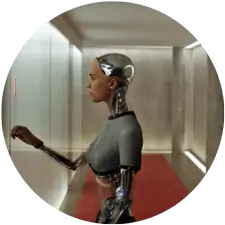

Artificial Intelligence

Page last updated 22 Sep 2024

Is AI (artificial intelligence) becoming so advanced that we will soon have machines that surpass human intelligence? And might this spell the end of human beings as the dominant intelligence on this planet? Some people, such as Stephen Hawking and Bill Gates, have warned of the possible dangers of AI; see, for example, the Open Letter from The Future of Life Institute, the AI research priorities document (PDF), and the BBC article “Stephen Hawking warns artificial intelligence could end mankind”.

Yes, it may happen, but is it really so regrettable from a long-term viewpoint? We are all going to die sooner or later, so if we regret that advanced machines may take over from humans in the future, what we are really regretting is that the future will not be the way we wanted it, or the way we always assumed it would be. But we don’t really know how the future is going to turn out anyway. Perhaps if machines take over, the earth may avoid ending up with an overpopulated humanity exhausting its finite resources, a scenario likely to bring a massive increase in wars, disease and starvation. In that case, in my opinion, a world where machines have taken over and use the earth’s resources sensibly would be preferable.

In the short term, a more likely scenario is perhaps one in which a human’s intelligence and memory are boosted by some sort of implanted artificial intelligence booster module. That raises the question: who will be able to afford these devices initially? Only the very rich. And once they have them, surely they will do everything they can to prevent everyone else from having them. Then we will truly have a two-tiered humanity, where the rich, as well as being rich, are also far more intelligent, and as a result probably much better at keeping themselves far richer than the common masses.

If that happens, it won’t be very pleasant being a non-rich person, and over time the rich will eliminate the non-rich, except where they remain useful as servants for activities in which employing them turns out to be cheaper than using a machine.

Is this a good thing or a bad thing? There really isn’t a single answer: if you are rich enough to afford the latest AI booster implant module, you will think it’s great, and if you can’t, you will think the whole thing stinks. But it’s hard to say whether the end result would make the earth a better place for life in general.

An interesting aspect regarding the possibility of advanced AI is what goals/

However, instilling in an AI machine a desire to follow a rather more nebulous notion, such as benefiting the overall interests of mankind, would be a completely different matter. The first major problems with advanced AI will probably arise when a human designer creates an AI whose goals benefit the designer but work against the overall interests of mankind. The difficulty in preventing unwanted AI behavior lies in defining which AI goals would be desirable to humankind and which would not. As AI becomes more complex, humans will lack the capacity to distinguish between the two, and it seems inevitable that some form of ‘survival of the fittest’, rather than human legislation, will eventually decide the outcome of advanced AI.


