Hilbert’s Tenth Problem

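Hilbert’s tenth problem asks for a process which, given any Diophantine equation, can determine in a finite number of steps whether that equation has a solution in integers. In 1970 Yuri Matiyasevich claimed to have proved that no such process can exist. But is Matiyasevich’s proof rock-solid? See the page: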
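As an illustration (not taken from the original page), the difference between *searching* for a solution and *deciding* whether one exists can be seen in a brute-force search. Take a Diophantine equation, a polynomial equation with integer coefficients. A search over integer tuples confirms a solution once it finds one, but a failed search up to any bound leaves all larger integers unchecked, so it cannot by itself show that there is no solution. This is a minimal sketch under stated assumptions: the function name `find_integer_solution` and the `max_bound` cutoff are hypothetical, and the cutoff exists only so that the example terminates.

```python
from itertools import product
from typing import Callable, Optional, Tuple


def find_integer_solution(
    poly: Callable[..., int], num_vars: int, max_bound: int
) -> Optional[Tuple[int, ...]]:
    """Search for integers x1..xn with poly(x1, ..., xn) == 0.

    Candidate tuples are tried in order of increasing height b = max |xi|,
    so every integer tuple would eventually be reached if max_bound were
    allowed to grow without limit. The hypothetical max_bound cutoff only
    makes the example terminate; returning None means "none found so far",
    not "no solution exists".
    """
    for b in range(max_bound + 1):
        values = range(-b, b + 1)
        for candidate in product(values, repeat=num_vars):
            # Skip tuples already checked at a smaller height.
            if max((abs(x) for x in candidate), default=0) != b:
                continue
            if poly(*candidate) == 0:
                return candidate
    return None


# x^2 + y^2 = 25 has integer solutions; the first one met in height order:
print(find_integer_solution(lambda x, y: x * x + y * y - 25, 2, 10))  # → (-4, -3)

# x^2 = 2 has none, but the search can only report that none was found:
print(find_integer_solution(lambda x: x * x - 2, 1, 50))  # → None
```

The search halts whenever a solution exists, but it runs forever on an equation without one if the bound is removed; a process of the kind Hilbert asked for would have to give a definite answer in both cases.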

But how rigorous is Gödel’s Proof? Did he make assumptions that might have seemed acceptable at the time, or that were overlooked, but which cannot be deemed acceptable today?

For an introduction to some questionable aspects of Gödel’s Proof see the page:

The “Axiom of Choice” is an assumption used by some mathematicians, who justify it on the grounds that it gives certain results that they want to achieve.

But it also leads to the result that a single sphere can be divided into pieces that can be reassembled into two spheres, each of the same volume as the original sphere.

That might be a desirable result for someone who wants to engage in fantasy, but it certainly isn’t what a scientist would want from his mathematics.

For a closer look at the “Axiom of Choice”, see the page:

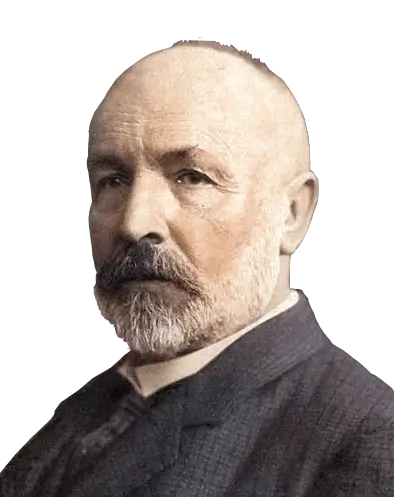

Most accounts gloss over various aspects of Cantor’s writings that today can appear strange, if not manifestly incorrect.

For a logical appraisal of Cantor’s methodology and of the thought processes indicated by his writings, see:

Henri Lebesgue’s answer was to assume that there are some points that have a width while all other points do not have any width.

For an in-depth examination of the contradictions arising from this notion, see the page:

An indefinable number might be said to be a number that contains an infinite amount of information that cannot be summarized by any finite definition.

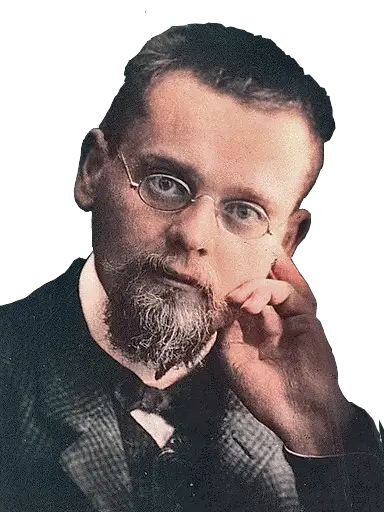

But Georg Cantor, the founder of the theory from which König’s idea of indefinable numbers arose, found the notion deeply disturbing when it was postulated, stating that ‘Infinite definitions (which are not possible in finite time) are absurdities. … Am I wrong or am I right?’

For a closer look at the notion of indefinable numbers, see the page:

As site owner I reserve the right to manage my comments sections as I deem appropriate. I do not use that right to unfairly censor valid criticism, and I do not delete a comment because it disagrees with what is on my website. Reasons for exclusion include:

Frivolous, irrelevant comments.

Comments devoid of logical basis.

Derogatory comments.

Long-winded comments.

Comments with excessive number of different points.

Questions about matters that do not relate to the page on which they are posted. Such posts are not comments.

Comments containing a substantial number of improperly formatted mathematical terms will not be published unless a file (such as doc, tex or pdf) in which the mathematical terms are correctly formatted is emailed to me at the same time.

Reasons for deleting comments of certain users:

Bulk posting of comments in a short space of time, often on several different pages, which are not simply part of an ongoing discussion. Use of multiple anonymous user names by one person.

Users who, when shown that their point is wrong, immediately claim that they simply wrote it incorrectly and then rewrite it, still erroneously, or else erroneously attack something else on my site. After the first few instances, further posts are deleted.

Users who make persistent erroneous attacks in a scatter-gun attempt to find some error in what I have written on this site. After the first few instances, further posts are deleted.

Difficulties in understanding the site content are usually best addressed by contacting me by e-mail.

“You can fool some of the people all of the time, and all the people some of the time, which is just long enough to be President of the United States.”

Spike Milligan

I have set up this website so that a user can switch to dark mode and can also revert to the browser/system setting. The details of how to implement this on a website are given at How to setup Dark mode on a web-site.

The page Understanding sets of decreasing intervals explains why certain definitions of sets of decreasing intervals are inherently contradictory unless limiting conditions are included, and the page Understanding Limits and Infinity explains how the correct application of limiting conditions can eliminate such contradictions. The paper PDF On Smith-Volterra-Cantor sets and their measure has additional material which gives a more formal version.

For details of how to set up a system for easy insertion or changing of footnotes in a web page, see Easy Footnotes for Web Pages.

After comments that my PDF paper on the flaw in Gödel’s incompleteness proof is too long, I have added a new section which gives a brief summary of the flaw, while the remainder of the paper details the confusion of levels of language. The paper can be seen at The Fundamental Flaw in Gödel’s Proof of his Incompleteness Theorem.

To understand the philosophy of set theory as it is today requires a knowledge of the history of the subject. One of the most influential works in this respect was Georg Cantor’s set of six papers published between 1879 and 1884 under the overall title Über unendliche lineare Punktmannigfaltigkeiten. I now have English translations of Part 1, Part 2, Part 3 and the major part, Part 5 (Grundlagen). There is also a new English translation of Cantor’s “A Contribution to the Theory of Sets”.

A look at how the field of meta-mathematics developed from its early days, and how certain illogical and untenable assumptions have been made that fly in the face of the mathematical requirement for strict rigor.

The pages of this website are set up to give a good printed copy without extraneous material.


Copyright James R Meyer 2012 - 2024

https://www.jamesrmeyer.com

Rationale: Every logical argument must be defined in some language, and every language has limitations. Attempting to construct a logical argument while ignoring how the limitations of language might affect that argument is a bizarre approach. The correct acknowledgment of the interactions of logic and language explains almost all of the paradoxes, and resolves almost all of the contradictions, conundrums, and contentious issues in modern philosophy and mathematics.

Site Mission

Please see the menu for numerous articles of interest. Please leave a comment or send an email if you are interested in the material on this site.

Interested in supporting this site?

You can help by sharing the site with others. You can also donate at where there are full details.
